In this post we focus on DevOps and how to apply its philosophy to Big Data projects built on Cloud technology, specifically with one of the components of Google Cloud Platform: Cloud Build.

In general terms, DevOps aims at team cohesion and automation, so that every development carried out on a Big Data platform reaches the project stakeholders (developers, administrators, operations, business, etc.) with the necessary guarantees.

Thanks to automation, we can dedicate our time to implementing functionality and responding to business needs, rather than spending it on repetitive tasks that machines can do, such as compiling, testing, and uploading code with security and quality guarantees. Automation also reduces the chances of failure.

The main objective of the DevOps philosophy is rapid deployment. Therefore, its purposes are:

- Increase the speed of software delivery.

- Improve the reliability of services.

- Create shared ownership of software.

- Develop software through close integration between developers and system administrators, so that developers can focus on developing and deploying their code in the shortest possible time.

- Reduce Time to Market.

Cloud Build: the challenge of continuous integration, deployment and delivery in Big Data-focused development

One of the best options for rapid deployment is Cloud Build, Google Cloud’s serverless service, which offers many advantages in CI/CD (Continuous Integration / Continuous Delivery) processes.

Continuous integration, deployment and delivery are a reality and a necessity in today’s projects. But when we talk about Big Data, we face a major challenge, because the essence of CI/CD is the complete automation of application deployment, including security analysis, unit testing and end-to-end testing. These tests run in environments where data is critical, so data volumes have a major impact.

With GCP Cloud Build we achieve this. The platform performs continuous integration and continuous delivery on serverless infrastructure.

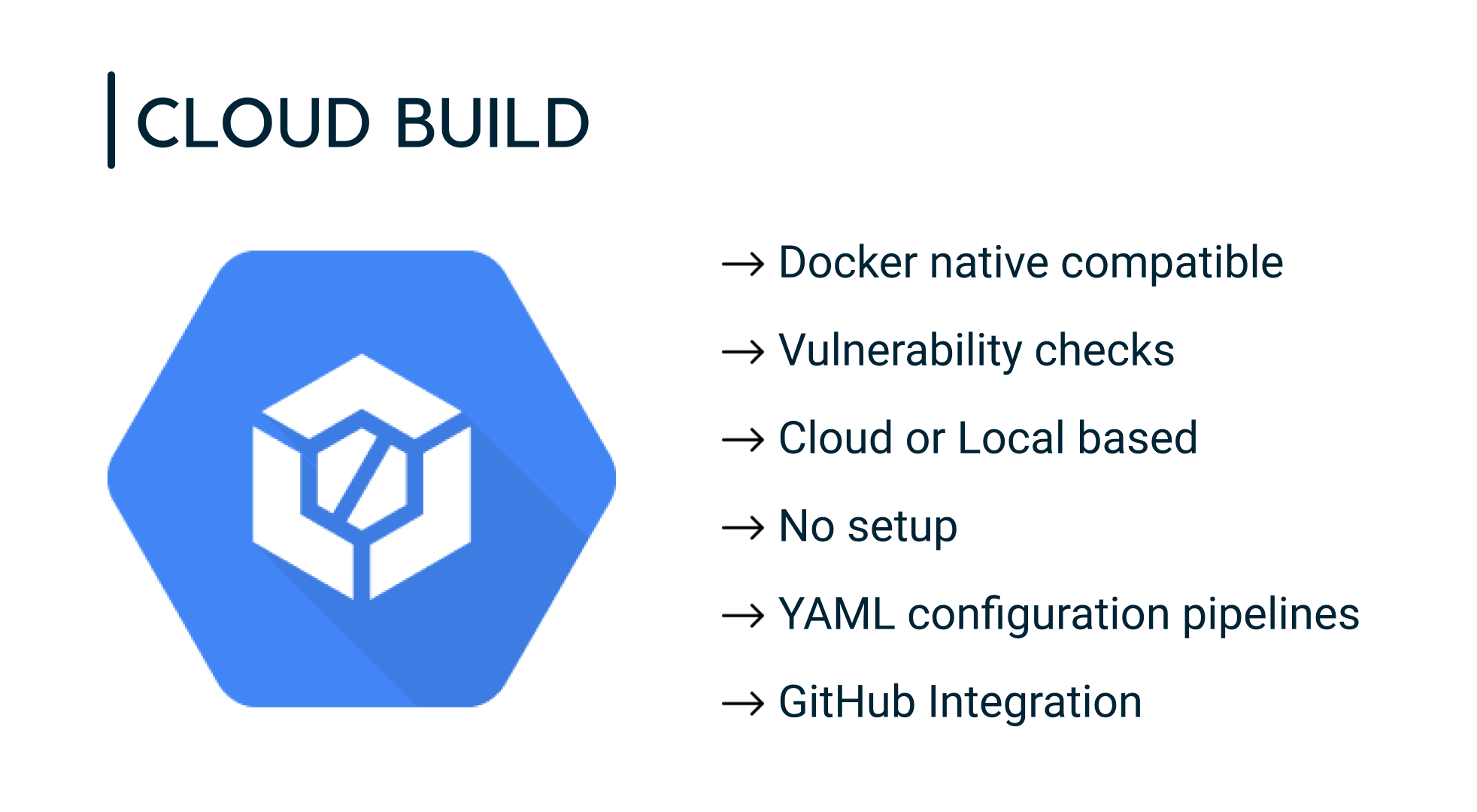

Among its functionalities, Cloud Build:

- Enables vulnerability testing and analysis.

- Deploys to multiple environments, including multi-cloud setups, as part of the CI/CD flow.

- Facilitates rapid software development in any programming language.

- Gives full control over the workflow definition to compile, test and deploy in different environments, such as virtual machines, serverless environments, Kubernetes or Firebase.

- Provides comprehensive security analysis as part of the CI/CD flow.
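
To make the idea of a workflow definition more concrete, here is a minimal sketch of a build configuration with test, build, push and deploy steps. Cloud Build configurations are usually written in YAML, but JSON is also accepted, so this Python snippet simply assembles the configuration as a dictionary and writes it to `cloudbuild.json`. The image name, the `etl-job` service, the region, the `tests/` folder and `requirements.txt` are all hypothetical placeholders, not details from a real project, and deploying to Cloud Run is only one possible serverless target.

```python
import json

# Hypothetical image name; $PROJECT_ID and $SHORT_SHA are Cloud Build
# substitutions that are resolved at build time, not by Python.
DEFAULT_IMAGE = "gcr.io/$PROJECT_ID/etl-job:$SHORT_SHA"


def build_config(image: str = DEFAULT_IMAGE) -> dict:
    """Return a Cloud Build configuration: test, build, push, deploy."""
    return {
        "steps": [
            {
                # Run unit tests first; a failing step stops the build.
                "id": "unit-tests",
                "name": "python:3.11",
                "entrypoint": "bash",
                "args": [
                    "-c",
                    "pip install -r requirements.txt && python -m pytest tests/",
                ],
            },
            {
                # Build the container image only after the tests pass.
                "id": "build-image",
                "name": "gcr.io/cloud-builders/docker",
                "args": ["build", "-t", image, "."],
                "waitFor": ["unit-tests"],
            },
            {
                # Push the image so the deploy step can reference it.
                "id": "push-image",
                "name": "gcr.io/cloud-builders/docker",
                "args": ["push", image],
                "waitFor": ["build-image"],
            },
            {
                # Deploy to a serverless target (Cloud Run, as an assumption).
                "id": "deploy",
                "name": "gcr.io/cloud-builders/gcloud",
                "args": [
                    "run", "deploy", "etl-job",  # hypothetical service name
                    "--image", image,
                    "--region", "europe-west1",  # hypothetical region
                ],
                "waitFor": ["push-image"],
            },
        ]
    }


if __name__ == "__main__":
    # Write the configuration next to the source code.
    with open("cloudbuild.json", "w", encoding="utf-8") as fh:
        json.dump(build_config(), fh, indent=2)
```

A file generated this way could then be submitted with `gcloud builds submit --config cloudbuild.json .`, or referenced from a build trigger. Security or vulnerability scanning would be added as further steps in the same list.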

If you want to go deeper into Google Cloud Platform’s Cloud Build capabilities and discover, in a practical way and in greater detail, how to build the complete CI/CD cycle on a serverless platform for Big Data development, Sergio Rodríguez de Guzmán, CTO of PUE, explains it in this video:

Why Google Cloud Platform for your Big Data project?

The experience and results obtained in ambitious and complex projects lead us to recommend Google Cloud Platform (GCP) as the best solution to integrate a Big Data project in the cloud.

The GCP ecosystem provides solutions for all the needs and milestones of a Big Data project: secure workload migration with quality guarantees, tools for data ingestion, preparation and exploitation, integration of ETL processes, development of IoT projects, AI platforms, and deep data analysis, among other capabilities and functions.

At PUE we can help you make your Big Data project in the Cloud a success. We are experts in Big Data, with deep knowledge of and proven experience in the Google Cloud Platform ecosystem.

PUE is:

- Cloudera Platinum Partner, the highest level of recognition.

- Sell, Service and Training Partner of Google Cloud, with expertise in all categories.

Links of interest:

Our services in Google Cloud technology

Official Google Cloud training and certification

Contact us:

sales@pue.es